Some additional results from my tests adding a large number of books (52,000) to calibre.

Moving the DB and the temporary directory to a ramdisk does not show any speed-up. Looking at the import after 20,000 books, calibre-debug uses 100% CPU (it always uses 100% CPU), while calibre-parallel uses ~0%. I would like to know how much time is spent in Python's garbage collector.
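One way to get a rough number for the garbage collector question is the `gc.callbacks` hook that Python 3.3+ provides. The following is only a minimal sketch, not something calibre ships: the `GCTimer` class and the toy workload are hypothetical, and it assumes you can run the code inside the process you want to measure.

```python
import gc
import time


class GCTimer:
    """Hypothetical helper: sums wall-clock time spent in cyclic GC runs."""

    def __init__(self):
        self.total_seconds = 0.0
        self.collections = 0
        self._started = None

    def __call__(self, phase, info):
        # The interpreter calls this with phase "start" before a collection
        # and "stop" after it; info is a dict with details about the run.
        if phase == "start":
            self._started = time.perf_counter()
        elif phase == "stop" and self._started is not None:
            self.total_seconds += time.perf_counter() - self._started
            self.collections += 1
            self._started = None

    def install(self):
        gc.callbacks.append(self)

    def uninstall(self):
        gc.callbacks.remove(self)


timer = GCTimer()
timer.install()

# Toy workload (an assumption, stands in for the real import):
# create reference cycles so the collector has something to do.
for _ in range(100_000):
    cycle = []
    cycle.append(cycle)
gc.collect()

timer.uninstall()
print(f"{timer.collections} collections, {timer.total_seconds:.4f} s in GC")
```

This only measures the cyclic collector, not ordinary reference-count deallocation, so it gives a lower bound on memory-management time rather than the full picture.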

The calibre debug output is at ~6 MB. About 30,000 books (1,836 MB) are left in calibre's temporary add_books directory. Dict.txt (from the GetFilename plugin) no longer changes: 52,966 lines, size 1.8 MB (using a very short path for the input files).

I tried to truncate the calibre debug output to 0 bytes with `cat /dev/null > tmp(debugout).txt`, but it grew back to its full size immediately.
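A possible explanation for the file jumping back to full size, assuming the debug output is written through a file handle that was opened without append mode (I have not checked how calibre or the shell redirection opens it): the writer keeps its old file offset, so the next write lands at that offset again and the file size becomes the old size plus the new data. The sketch below reproduces this with a hypothetical helper `size_after_truncate`; on Linux the non-append case ends up at 1001 bytes and the append case at 1 byte.

```python
import os
import tempfile


def size_after_truncate(mode):
    """Write 1000 bytes, truncate the file from outside, write 1 more byte.

    mode is "w" (plain write, keeps its own offset) or "a" (append).
    Returns the final file size in bytes.
    """
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        with open(path, mode) as writer:
            writer.write("x" * 1000)
            writer.flush()

            # Same effect as: cat /dev/null > file
            open(path, "w").close()

            # The writer still remembers offset 1000 unless it is in append mode.
            writer.write("y")
            writer.flush()
            return os.path.getsize(path)
    finally:
        os.remove(path)


print("plain write :", size_after_truncate("w"))
print("append mode :", size_after_truncate("a"))
```

If this is what happens here, redirecting the debug output with `>>` instead of `>` should make truncation stick, because the shell then opens the file in append mode.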

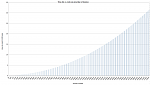

To find out how many smaller jobs I should split the big job into, I made the following chart. It shows how many hours I would need for 52,000 books, depending on the number of books per small job: